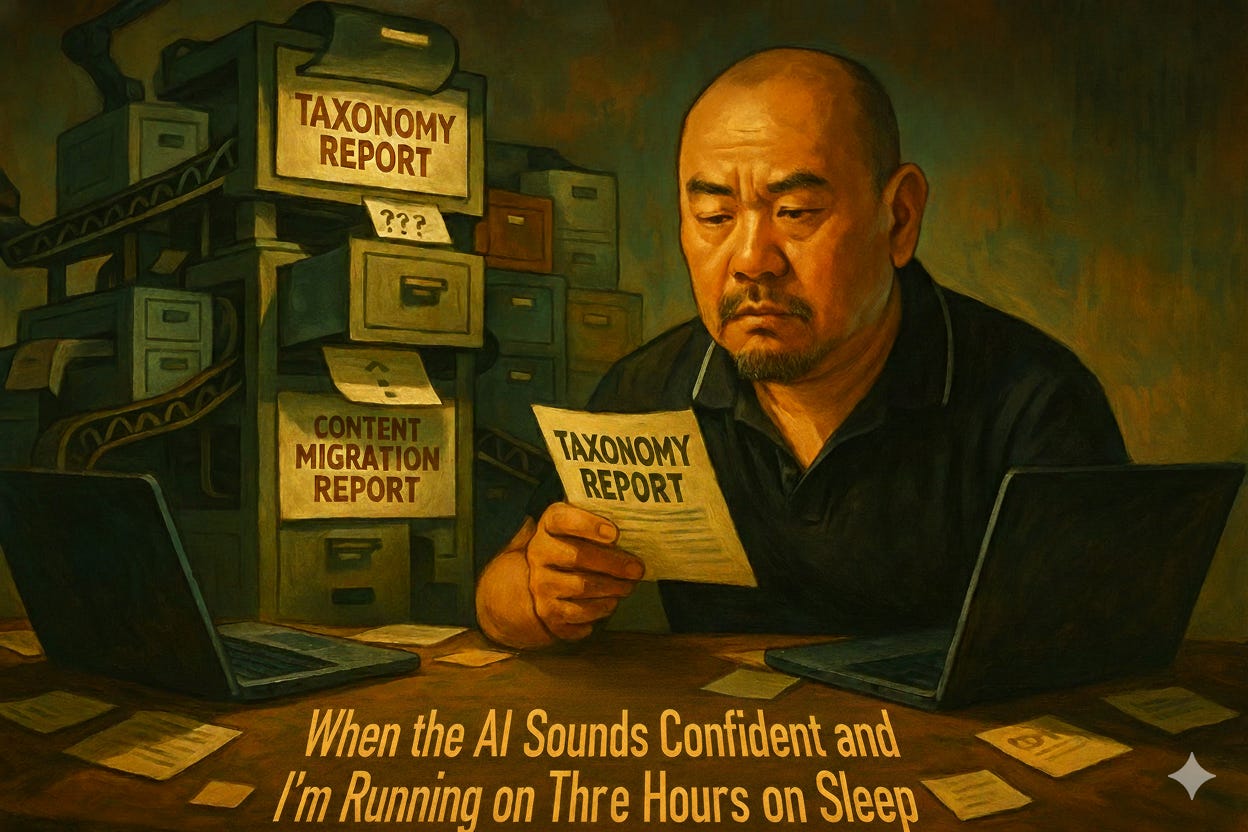

When the AI Sounds Confident and I’m Running on Three Hours of Sleep

Or: Why I Still Have to Fix Its Bullshit at 11 PM

Gemini looked me dead in the eye—metaphorically speaking, since it’s a text interface and doesn’t have eyes… yet—and told me my taxonomy reports were actually content migration reports.

I believed it.

Not immediately, of course. I’m not that gullible. But I’m also working on three hours of sleep, juggling two laptops (one personal, one work, because my new company won’t let me run LittleBird.ai, the privacy nightmare I pay actual money for because my memory is that bad), and voice-memoing myself every 25 minutes like some kind of analog backup system from 1987.

The ADHD kicked in around hour two. I forgot to keep recording. Obviously.

So when Google’s fancy AI told me I needed to write an educational ecosystem document—hadn’t I already done that when I drafted the structural course outline two weeks ago?—I thought: Shit. Maybe I forgot. I mean, I know Bloom’s taxonomy. I’ve built course structures before. But knowing theory and drowning in practice are two different things, and right now I’m face-down in the deep end while theory waves from the shore.

The Part Where I Get a Job (Plot Twist)

Wait, I buried the lede. I got a job. I know, right?

I haven’t said anything because the last time I announced employment, it lasted less than fourteen days, and then I went another 229 days without paid work.[1] This one came through a personal referral, which means I’m both grateful and terrified of fucking it up spectacularly. Short-term contract, curriculum development, heavy AI usage. Basically, everything I’ve been doing for free, except now someone’s paying me.

It ends mid-January. If they have the budget, maybe I’ll stay on. If not, no harm, no foul. Fingers crossed, etc.

Anyway. Where was I?

Right. The AI confidently bullshitting me.

The Taxonomy Incident

I asked Gemini to help with boilerplate. Standard stuff. Organizing educational content into categories—taxonomy reports here, content migration reports there, learning outcomes filed under this, assessment frameworks under that.

It did it admirably. Professional. Thorough. Wrong.

Somewhere around day two, I’m writing about learning paths when I realize: wait, didn’t I already write this? Not just part of it—this exact thing. The structure. The categories. The whole goddamn framework.

I stared at my screen, that familiar impostor syndrome crawl starting up my spine. Am I the idiot here? Did I misunderstand my own job?

Then I looked closer.

The AI had sorted “taxonomy reports” and “content migration reports” into completely different buckets, as if they lived in separate universes. Except they don’t. They’re siblings—cousins at minimum. The kind of content that should share organizational DNA, not get exiled to opposite wings of the building.

I know this. I’ve built these systems before.

But the AI was so fucking confident. No hedging. No “this might not be optimal.” Just clean, authoritative text that said, “Trust me, I went to taxonomy college.”

And for maybe an hour, I did trust it. I nodded along. I thought, “Okay, cool, this makes sense.”

Except it never made sense.

The 30% Tax Nobody Talks About

Here’s what the marketing pitch doesn’t tell you: AI tools don’t save time if you have to verify everything they produce. And if you don’t verify, you end up presenting garbage to your boss, which is how you get fired in slow motion.

I’ve started building a 30% refactor buffer into any AI-assisted work. Thirty percent. That’s not a tool making me efficient—that’s an unpaid intern who hallucinates and needs constant supervision.

This isn’t even my first rodeo. A couple of months ago, I asked Claude.ai to build a simple Obsidian plugin for me. Quality-of-life improvement, nothing fancy. What I got was 150,000 lines of enterprise spaghetti code—the Winchester Mystery House of software, complete with staircases to nowhere and rooms that looped back into themselves.

Technically functional. Completely unusable.

That should’ve been my warning.

We’re All Guinea Pigs Now

The thing that keeps me up at night—besides untangling AI-generated taxonomies—is that we’re all running this experiment together. Every tech professional is trying to figure out when to trust the robots and when to grab the wheel. We’re supposed to be “AI-powered” and “10x more productive,” but nobody talks about the hours we spend at midnight, rebuilding the shit the AI confidently fucked up.

And we can’t admit it, can we? Saying “AI made more work for me” feels like admitting you’re bad at the future. Like you’re the guy who couldn’t figure out email in 1997. So we all quietly refactor at 11 PM and pretend everything’s great.

I still think AI is useful. I’m giving a lunch-and-learn next week on how I used it to convince my mom she was being targeted by scammers this summer. Long story. But there’s this gap between “AI is magic” and “AI is the harbinger of death,” and most of us live in that uncomfortable middle ground where it’s both—magic that occasionally tries to kill you, like a cursed artifact that grants wishes but also might strangle you in your sleep.

The AI didn’t know it was wrong. That’s the terrifying part. It delivered a garbage taxonomy with the same confidence it would’ve delivered the correct answer. No hesitation. No “I might be mistaken here.” Just: Here’s your framework. You’re welcome.

Trust, but For God’s Sake, Verify

AI is great at confidence. It’s less great at consequences.

And that’s the part that keeps hitting me in the middle of the night, as I compare my memory against a chatbot’s hallucination. The AI never has to explain itself. It never has to answer for the mess. It never has to worry about getting something subtly, professionally, career-jeopardizingly wrong.

I do. I have.

That’s the irony, I guess: the more these tools promise to augment me, the more critical my actual expertise becomes. Not to produce the work—AI can spit that out in seconds—but to decide whether the work is real, whether it holds up, whether it won’t embarrass me in front of a stakeholder who actually knows the difference.

The robots can take the first pass. Hell, they can take the tenth.

But the final call?

That’s still mine.

And I’m learning that trusting AI is incredibly easy.

Verifying it is where the actual job begins.

[1] Which means it had been a total of 664 days since I last had a job, but who’s counting!? Okay, time to retire that whole thing, like, forever.
